Continuous Performance Testing – What prevents you from doing it?

by Till Neunast on 26 July 2017

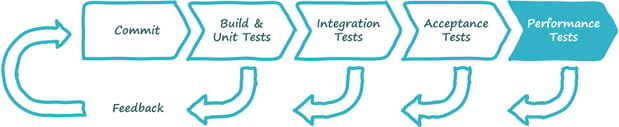

Continuous Testing is automated testing that gives fast feedback on build quality by being integrated into the Continuous Delivery process. This sounds like a nice concept, but does performance testing really fit in, and if so, how?

Performance testing and the DevOps and Agile mindset

To put you in the right mood, let me start off with a performance-testing-centric interpretation of a few DevOps and Agile mantras:

- Deliver better software faster: Performance testing traditionally happens late in the process but in a DevOps or Agile world needs to provide value sooner by finding issues earlier when they are not as costly to fix. As soon as possible could mean with every single code change.

- Fail fast: The sooner you detect a performance issue, the quicker it is to resolve, because it can be pinpointed much more easily (ideally to a single change). Failing a build for poor performance also saves time, because the build is not accepted into the next testing phase (e.g. manual testing).

- Automate everything: A script that takes care of test data setup frees up a human mind to do more intelligent things, like problem analysis.

- Eliminate waste: Manual tasks are a form of waste.

- Ready to ship at the end of each iteration: “Done” should include testing for non-functional requirements like performance, stability, capacity etc. (This testing can, however, be deferred to dedicated stories or hardening iterations.)

My vision for continuous performance testing

My vision is simple: Create a Continuous Delivery pipeline (or part thereof) which stops a build when performance degradation occurs, just like for functional regression. This can then be connected to the existing pipeline that builds, deploys, and runs automated functional tests, etc. It takes care of all the tedious tasks that precede an actual performance test run.

There are some limitations and caveats of course: This approach lends itself better to performance regression testing than testing new features. It may be better to measure against baseline results (benchmarking) rather than SLAs or NFRs so as to actually detect regression. This is not supposed to be a replacement for a full end-to-end load testing phase, but meant to avoid nasty surprises in that final phase.
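To make the idea of a build-stopping check concrete, here is a minimal sketch of a gate that compares a current measurement against a baseline. The function names, the 10% tolerance and the sample numbers are my own assumptions for illustration; a real pipeline would read both values from stored test results.

```python
def within_tolerance(baseline_ms: float, current_ms: float, tolerance: float = 0.10) -> bool:
    """Return True when the current response time is no more than
    `tolerance` (as a fraction) slower than the baseline."""
    return current_ms <= baseline_ms * (1.0 + tolerance)


def gate(baseline_ms: float, current_ms: float, tolerance: float = 0.10) -> int:
    """Return an exit code for the pipeline step: 0 lets the build
    continue, 1 stops it."""
    if within_tolerance(baseline_ms, current_ms, tolerance):
        print(f"PASS: {current_ms:.0f} ms vs baseline {baseline_ms:.0f} ms")
        return 0
    print(f"FAIL: {current_ms:.0f} ms exceeds baseline {baseline_ms:.0f} ms "
          f"by more than {tolerance:.0%}")
    return 1


# Hypothetical numbers: the previous good build took 200 ms, this one 245 ms.
exit_code = gate(baseline_ms=200.0, current_ms=245.0)
```

A pipeline step would typically pass `exit_code` to `sys.exit()`, so that a non-zero value fails the stage in the same way a failed functional test does.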

So why aren’t you doing continuous performance testing?

Your mileage may vary but the challenges will generally be in the following categories:

- Environments

- Deployments

- Test data

- Changing functionality

- Changing workload

- Results analysis

Let’s elaborate on each of these challenges a little further…

1. “I don’t have a production-like environment for exclusive use!”

OK, maybe you are part of the unlucky bunch of people who don’t have “infrastructure as code” yet and cannot simply spin up a few servers on demand. You may not even have a dedicated pre-production environment for performance testing.

However, you can still make do with what is there. If your environment is lower-spec than production you may have to scale down the workload. You won’t be able to measure against real SLAs but you can still do trending comparisons between previous and current builds.
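As a rough illustration of scaling down the workload, the sketch below shrinks a production workload model by a single factor. The transaction names, the rates and the 25% factor are hypothetical, and the assumption that load scales linearly with environment size is a simplification that will not hold for every system.

```python
def scale_workload(production_rates: dict, scale: float) -> dict:
    """Scale each transaction rate (requests per minute) by `scale`,
    keeping at least one request per minute for every transaction."""
    return {
        name: max(1, round(rate * scale))
        for name, rate in production_rates.items()
    }


# Hypothetical production workload model, in requests per minute.
production = {"login": 120, "search": 400}

# Assume the test environment has roughly a quarter of production capacity.
test_workload = scale_workload(production, 0.25)
print(test_workload)
```

Because the same scaled model is used for every build, the results stay comparable from build to build even though they say little about real SLAs.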

If you share the environment with others, you may still be able to find a time window where you can schedule your performance test. You may have to manage test data a bit more carefully though, for which setup and teardown scripts may help.
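A setup and teardown script for a shared environment could look something like the sketch below. It uses an in-memory SQLite database purely as a stand-in for the real system, and the `customers` table, the `created_by` column and the `perf-run` tag are assumptions made up for the example.

```python
import sqlite3
from contextlib import contextmanager

RUN_TAG = "perf-run"  # hypothetical marker identifying rows owned by the test


@contextmanager
def perf_test_data(conn: sqlite3.Connection, rows: int):
    """Insert tagged test rows before the test and remove them afterwards,
    even if the test itself raises an exception."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (name TEXT, created_by TEXT)"
    )
    conn.executemany(
        "INSERT INTO customers (name, created_by) VALUES (?, ?)",
        [(f"perf-user-{i}", RUN_TAG) for i in range(rows)],
    )
    conn.commit()
    try:
        yield conn
    finally:
        # Teardown: delete only the rows this run created.
        conn.execute("DELETE FROM customers WHERE created_by = ?", (RUN_TAG,))
        conn.commit()


conn = sqlite3.connect(":memory:")
with perf_test_data(conn, rows=1000):
    # The performance test would run here.
    count_during = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
count_after = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
```

Tagging the rows means the teardown leaves other people's data in the shared environment untouched.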

2. “I have to wait for someone to deploy the build before I can start any testing!”

Admittedly, deployment processes seem to get more and more complex, making it harder to develop automated deployment scripts. However, the gains will be manifold when the complex manual process can be replaced across many environments.

Sure, you may not have rollback scripts for the database because they might just be too hard to write. But then ask yourself whether you really need to roll back in your environment, and for what purpose (fail forward!).

Even if the whole process cannot be scripted at once, at least make a start.

3. “But I have to inject huge amounts of test data before I can run meaningful tests!”

True, there’s often little point in performance testing on an empty database. But remember, we are interested in performance regression. Even streamlined, automated test data generation/injection scripts may still need hours to synthesize production-like data volumes, so trim those volumes down for trending tests.
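One way to trim data volumes is to give the generation script a scale parameter. The following is only a sketch: the order fields, value ranges and the one-million-row production volume are invented for the example. A fixed seed keeps the generated data identical between runs, which helps when comparing builds.

```python
import random


def generate_orders(production_rows: int, scale: float = 1.0, seed: int = 42):
    """Yield synthetic order records. `scale` trims the volume for
    trending tests; the fixed seed makes every run produce the same data."""
    rng = random.Random(seed)
    target_rows = max(1, round(production_rows * scale))
    for order_id in range(target_rows):
        yield {
            "order_id": order_id,
            "customer_id": rng.randrange(1, 10_000),
            "amount_cents": rng.randrange(100, 50_000),
        }


# Hypothetical production volume of one million rows, trimmed to 0.1%.
trimmed = list(generate_orders(1_000_000, scale=0.001))
print(len(trimmed))
```

The full-volume run with `scale=1.0` can then be reserved for the end-to-end load testing phase.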

4. “What about new features? They cannot be tested continuously!”

Sure, new features need to be performance tested too. But they have all the focus anyway, so don’t forget the performance regression testing of “old” features. Also, new features are only new once – the first time they are delivered. By the way, functional test automation has the same type of problem.

5. “There’s a new feature that will totally change the workload, so a trending comparison will be meaningless!”

Be agile and update your workload model as soon as possible. Of course this will affect the ability to compare with previous results. Divide your test scenarios so they can still be compared:

- Take a baseline on the old version, without the new feature

- Run a comparison test on the new version, without the new feature - to detect regression introduced in the new version

- Run a comparison test on the new version, with the new feature - to detect impact of feature and/or workload changes.

Each of those can be integrated with the automated approach.
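The three runs above can be turned into two separate numbers: one for regression in the new version and one for the impact of the new feature. The sketch below assumes each run has already been reduced to a single response time; the function names and the sample values are hypothetical.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100.0


def attribute_changes(old_without: float, new_without: float, new_with: float) -> dict:
    """Separate regression in the new version from the impact of the
    new feature, using the three test runs described above."""
    return {
        # Old version vs. new version, both without the new feature.
        "regression_pct": pct_change(old_without, new_without),
        # New version without vs. with the new feature.
        "feature_impact_pct": pct_change(new_without, new_with),
    }


# Hypothetical average response times in milliseconds.
result = attribute_changes(old_without=200.0, new_without=210.0, new_with=260.0)
print(result)
```

Only the first number needs to stay inside the regression tolerance. The second one describes an intended change and becomes part of the new baseline.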

6. “It takes hours of analysis for a human performance engineer to assess whether NFRs are met!”

Good news: You have spent those hours before. Assuming you have a tuned system that performs well, you know how it behaves in terms of typical response times and resource consumption metrics. What you should be worried about is detecting a deviation from that good behaviour, e.g. a sudden increase in session size or database response times. This can be done by aggregating the metrics of interest and comparing against the ones from a previous or known good build. Fail the build if you measure anything suspicious. Then a human can start to investigate.
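Aggregating the metrics of interest and comparing them against a known good build might look like the sketch below. The metric names, the sample values, the choice of median and 95th percentile, and the 15% tolerance are all assumptions for illustration. Which statistics and thresholds make sense depends on the system under test.

```python
import math
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile of a list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples):
    """Aggregate raw samples into the statistics we want to compare."""
    return {"median": statistics.median(samples), "p95": percentile(samples, 95)}


def find_deviations(baseline: dict, current: dict, tolerance: float = 0.15):
    """Return (metric, statistic, baseline value, current value) for every
    statistic that is more than `tolerance` above the known good build."""
    deviations = []
    for metric, base_samples in baseline.items():
        base = summarize(base_samples)
        cur = summarize(current[metric])
        for stat, base_value in base.items():
            if cur[stat] > base_value * (1 + tolerance):
                deviations.append((metric, stat, base_value, cur[stat]))
    return deviations


# Hypothetical samples from a known good build and from the current build.
good_build = {"db_response_ms": [10, 11, 12, 11, 10], "session_size_kb": [40, 41, 40, 42, 41]}
this_build = {"db_response_ms": [10, 11, 12, 11, 10], "session_size_kb": [80, 82, 81, 80, 83]}

for metric, stat, base_value, cur_value in find_deviations(good_build, this_build):
    print(f"{metric} {stat}: {base_value} -> {cur_value}")
```

An empty list lets the build pass. Anything else fails it and gives the human performance engineer a short list of where to start looking.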

Shift-left your performance tests

In this fast-changing world, performance testing cannot afford to lag behind. Ironically, the technical challenges can often be solved more easily than the paradigm shift can be made. So, start today and shift-left your performance tests! Let us know in the comments if you have tried continuous performance testing or similar approaches. What did you find worked well and what challenges were difficult to overcome?
