
Part four: Gotcha! Pinpointing a browser performance problem under Citrix

by Ben Rowan on 08 March 2016

In this series of posts we started by troubleshooting a hard-to-find software performance problem, and in part two began following the trail to performance problems in front of the web server. Then in part three we narrowed in on the problem with some confidence that Citrix was a contributing factor. A meeting was booked to show what we’d found, and I was confident the problem wouldn’t appear, but it did…

There’s nothing quite like a demonstration completely contradicting what you expected to happen. The information learned in that moment was pivotal, but it would have been nice to find it under different circumstances! The characteristics of the performance problem were becoming clearer: We were now looking at an issue which appeared when Internet Explorer was being used remotely – either via Remote Desktop or from within a Citrix environment.

Profiling

Our client uses Internet Explorer 9. IE9 has a set of developer tools built in which, among other things, can profile the performance of JavaScript. We decided to see what difference remotely accessing a browser made to the performance of JavaScript, and were surprised by the results.

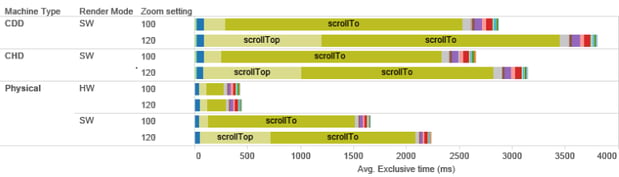

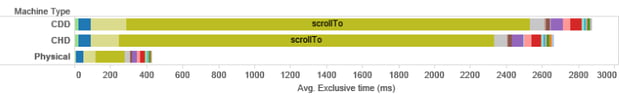

We decided on a few scenarios to profile, and set about recording several iterations from a physical PC, a CHD session and a CDD session to measure the differences. The results are quite striking: This chart shows the “Exclusive Time” for the top 20 JavaScript functions during a particular navigation event within the application. We can clearly see that the scrollTo function performs significantly worse via CDD or CHD than it does via a physical PC.
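The comparison behind this chart can be sketched in code. This is a minimal sketch, assuming the profiler's per-function results for each recorded iteration have been exported or transcribed into simple records of function name and exclusive time in milliseconds. The record shape and the names `ProfileRow`, `averageByFunction` and `compareEnvironments` are hypothetical and do not reflect the IE9 export format.

```typescript
// One profiler row: a function name and its exclusive time in milliseconds.
// Hypothetical shape; adapt to however the profiler results were exported.
interface ProfileRow {
  functionName: string;
  exclusiveMs: number;
}

interface Comparison {
  functionName: string;
  baselineMs: number; // mean exclusive time on the physical PC
  remoteMs: number; // mean exclusive time in the remote session
  ratio: number; // remoteMs / baselineMs (Infinity when the baseline mean is 0)
}

// Mean exclusive time per function over all recorded iterations. A function
// missing from an iteration is treated as having spent 0 ms in that run.
function averageByFunction(iterations: ProfileRow[][]): Map<string, number> {
  const sums = new Map<string, number>();
  for (const rows of iterations) {
    for (const row of rows) {
      sums.set(row.functionName, (sums.get(row.functionName) ?? 0) + row.exclusiveMs);
    }
  }
  const averages = new Map<string, number>();
  sums.forEach((sum, name) => averages.set(name, sum / iterations.length));
  return averages;
}

// Compares each function seen in the remote session against the baseline and
// returns the `top` functions with the largest extra time spent remotely.
// Functions that appear only in the baseline are ignored.
function compareEnvironments(
  baseline: ProfileRow[][],
  remote: ProfileRow[][],
  top: number,
): Comparison[] {
  const baseMeans = averageByFunction(baseline);
  const remoteMeans = averageByFunction(remote);
  const rows: Comparison[] = [];
  remoteMeans.forEach((remoteMs, functionName) => {
    const baselineMs = baseMeans.get(functionName) ?? 0;
    const ratio = baselineMs > 0 ? remoteMs / baselineMs : Infinity;
    rows.push({ functionName, baselineMs, remoteMs, ratio });
  });
  rows.sort((a, b) => (b.remoteMs - b.baselineMs) - (a.remoteMs - a.baselineMs));
  return rows.slice(0, top);
}
```

Ranking by absolute extra time rather than by ratio keeps tiny functions with large ratios from crowding out the functions that actually cost users time.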

Similar patterns were repeated throughout the system. This was very interesting, but we had also experienced slow performance on a physical PC when the machine was accessed via Remote Desktop. Why?

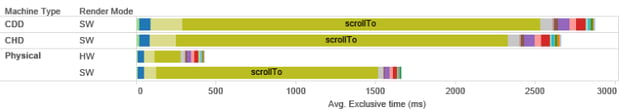

Further reading about how Internet Explorer behaves in remote environments revealed that, when accessed remotely, IE defaults to software rendering. Profiling in both hardware and software rendering modes confirmed this:

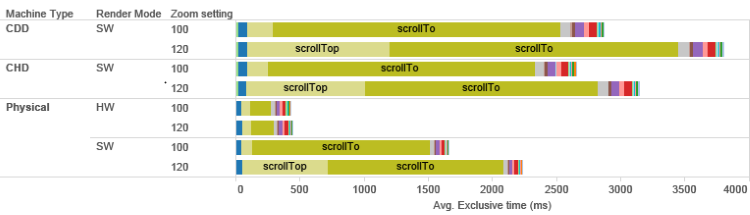

This was excellent news: From a scope of “there’s a problem, please find it” we had narrowed the issue down to inefficiencies in the execution of a specific JavaScript function when called in software rendering mode. During profiling, we also discovered that performance was much better with the IE zoom level set to 100% than at 120%.

Knowing what the problem is doesn’t automatically fix it, of course, and efforts to address this issue are presently ongoing:

- The application developer has released several patches which minimise the number of times scrollTo is called

- The possibility of installing hardware GPU capability in the Citrix environment is being looked at

- Our profiling suggested several things users could do to improve their performance, like always using their browser on 100% zoom where possible

- Further profiling on Internet Explorer 11 suggests better performance is likely following a browser upgrade, though some of the gains may be clawed back by other inefficiencies. Expect another blog post on this in future!
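How the developer’s patches achieve this isn’t covered here. One common technique for reducing calls is to coalesce a burst of scroll requests so that only the final target is applied, once, after the current script finishes. This is a minimal sketch, assuming the application ultimately scrolls via `window.scrollTo(x, y)`. `createCoalescedScroll` and `Scheduler` are hypothetical names. IE9 has no `requestAnimationFrame`, so the default scheduler defers with `setTimeout`.

```typescript
// A scheduler runs a callback later. It is injectable so the behaviour can be
// tested without a browser; by default it defers with setTimeout (IE9-safe).
type Scheduler = (callback: () => void) => void;

type ScrollFn = (x: number, y: number) => void;

const deferWithTimeout: Scheduler = (callback) => {
  setTimeout(callback, 0);
};

// Returns a function with the same signature as scrollTo. Calls made before the
// scheduled flush only update the pending target; the real scroll runs once.
function createCoalescedScroll(scroll: ScrollFn, schedule: Scheduler = deferWithTimeout): ScrollFn {
  let pending: { x: number; y: number } | null = null;
  return (x, y) => {
    const alreadyScheduled = pending !== null;
    pending = { x, y }; // the last request in a burst wins
    if (alreadyScheduled) {
      return;
    }
    schedule(() => {
      const target = pending;
      pending = null; // allow the next burst to schedule a new flush
      if (target !== null) {
        scroll(target.x, target.y);
      }
    });
  };
}
```

In a page this would be wired up as `createCoalescedScroll((x, y) => window.scrollTo(x, y))`, with callers using the returned function instead of `window.scrollTo`. The trade-off is that the scroll happens slightly later, after the calling script yields.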

What did we learn?

This engagement taught us some important lessons about our testing methodology: By applying load at the HTTP level, the standard suite of tests did identify the performance changes in the application, and did confirm that the application remained stable and responsive under load. These conclusions were accurate, as the production system behaved in much the same way as the test system. And yet, users still experienced poor performance. How could we do better next time?

The HTTP injection approach cannot identify performance problems in client-side code. In an attempt to mitigate this risk, manual testers perform transactions while the system is under load from the test rig, and report on their experience. In most cases that is sufficient, but in this case it didn’t provide the information we needed. There are two key reasons for this:

- Manual testers accessed the system via physical PCs, and the problems only became apparent when the browser was using software rendering mode.

- Manual testers are asked for a subjective experience report, rather than being able to carefully measure statistics about the user experience.

To close these gaps:

- Have manual testers perform their transactions from a user-like platform (Citrix in this case)

- Use the developer tools in Internet Explorer to precisely measure the performance of client-side JavaScript.
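Developer tools profiling can be complemented by timing a specific action in script, so that exactly the same measurement runs on a physical PC and inside a Citrix session. This is a minimal sketch; `timeRepeated` is a hypothetical helper. It uses `Date.now()` because IE9 does not support `performance.now()`, and it only captures time spent synchronously inside `action`, not any painting the browser does afterwards.

```typescript
// Returns the current time in milliseconds; injectable for testing or for a
// higher-resolution clock in browsers that support one.
type Clock = () => number;

interface TimingSummary {
  runs: number;
  medianMs: number;
  maxMs: number;
}

// Runs `action` the given number of times and summarises the durations.
function timeRepeated(action: () => void, runs: number, clock: Clock = Date.now): TimingSummary {
  if (runs < 1) {
    throw new Error("runs must be at least 1");
  }
  const samples: number[] = [];
  for (let i = 0; i < runs; i++) {
    const start = clock();
    action();
    samples.push(clock() - start);
  }
  samples.sort((a, b) => a - b);
  const mid = Math.floor(samples.length / 2);
  // Median of the sorted samples; average the middle pair for an even count.
  const medianMs = samples.length % 2 === 1 ? samples[mid] : (samples[mid - 1] + samples[mid]) / 2;
  return { runs, medianMs, maxMs: samples[samples.length - 1] };
}
```

Reporting the median rather than the mean keeps one unusually slow run in a remote session from dominating the result, while the maximum still flags that such runs occurred.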

These two measures now form part of our ongoing testing process, and we should catch any issues similar to this one in the future.

Thanks for reading along. I hope you’ve enjoyed the journey.
