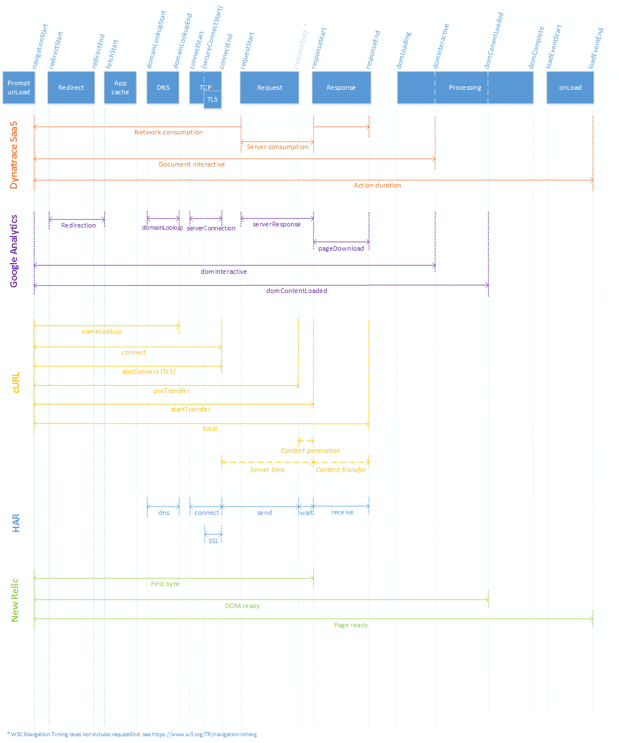

Website performance timing points – Comparing Google Analytics, Dynatrace SaaS, cURL, HAR and New Relic

by Jeremy Cook on 25 October 2016

As more software and services are built to quantify website performance, there is an increasing need to compare those different sources. Sometimes it is an easy apples-to-apples comparison, such as when the tools share a common underlying API like W3C Navigation Timing. Mostly it is not.

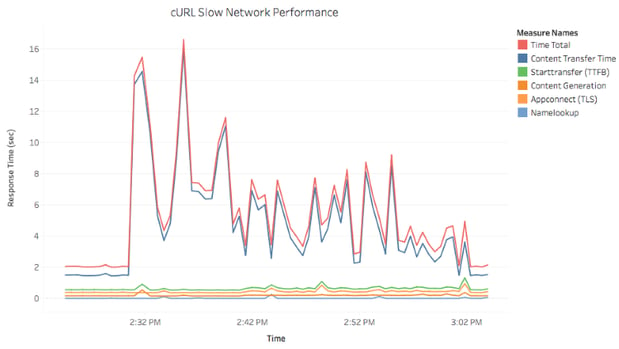

Recently we had a client whose SaaS exhibited large performance degradation for minutes at a time. They already had Google Analytics, but the SaaS provider allowed us to insert the agentless Dynatrace SaaS JavaScript snippet into the HTML to monitor real user interactions. Because of the network-specific nature of this particular problem, we also ran continuous cURL requests from several locations.

These three sources provide similar information, but with different terminology and different methods of timing. To complicate things a little, some interpolation of timing points can be required to get useful comparisons.

So what I’ve done is attempt to map Google Analytics, Dynatrace SaaS, cURL, HAR and New Relic events against the W3C Navigation Timing API. Hopefully others will be added later. I’ve used the W3C Navigation Timing API as the base because it provides a standardised JavaScript-based API with well-understood timing attributes.

This is a work in progress so please provide any feedback if you disagree.

A reference diagram for Google Analytics, Dynatrace SaaS, cURL, HAR, New Relic using the Navigation Timing API

There are many technical notes behind this diagram that can cause important changes to these timing points, primarily when Ajax calls are involved.

Dynatrace SaaS

Dynatrace is an application performance monitoring solution, available either managed on site or as a cloud-based SaaS; we’ll discuss the latter here. It has a lot of hidden depth, but one of the more obvious interfaces is the “Performance Analysis” of the “Slowest 10% of actions”; an example is shown below.

Taken directly from a Dynatrace support request, these contributors are defined as:

- Action duration: (loadEventEnd or endTimeOfLastXHR) - actionStart

- actionStart = navigationStart for page loads, or "click time" for XHR actions and user navigations such as a button click or a click on a link

- endTimeOfLastXHR = if XHR calls are triggered during the process and are not finished before loadEventEnd then the end time of the last XHR call is used instead of the loadEventEnd time

- Server consumption: responseStart - requestStart

- Network consumption: (requestStart - actionStart) + (responseEnd - responseStart)

- Document interactive time: the Navigation Timing domInteractive attribute, which returns the time immediately before the user agent sets the current document readiness to "interactive".
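
As a rough illustration of the definitions above, the following Python sketch derives the three contributors from a dictionary of Navigation Timing values. The function name, its parameters and the sample numbers are hypothetical, and it assumes all values are millisecond timestamps on the same clock.

```python
def dynatrace_contributors(t, action_start=None, end_of_last_xhr=None):
    """Derive the Dynatrace contributors from Navigation Timing values (ms)."""
    # actionStart is navigationStart for page loads; a click time may be passed instead.
    start = t["navigationStart"] if action_start is None else action_start
    # The end of the last XHR replaces loadEventEnd only if it finishes later.
    end = t["loadEventEnd"]
    if end_of_last_xhr is not None and end_of_last_xhr > end:
        end = end_of_last_xhr
    return {
        "action_duration": end - start,
        "server_consumption": t["responseStart"] - t["requestStart"],
        "network_consumption": (t["requestStart"] - start)
        + (t["responseEnd"] - t["responseStart"]),
    }


# Hypothetical sample values in milliseconds.
sample = {
    "navigationStart": 1000,
    "requestStart": 1120,
    "responseStart": 1400,
    "responseEnd": 1450,
    "loadEventEnd": 2600,
}
result = dynatrace_contributors(sample, end_of_last_xhr=2900)
print(result)
```

Passing no `end_of_last_xhr` falls back to loadEventEnd, which matches the page load case with no outstanding XHR calls.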

Google Analytics

The Google Analytics Site Speed metrics represent Navigation Timing API events:

- pageLoadTime = loadEventStart - navigationStart

- domainLookupTime = domainLookupEnd - domainLookupStart

- serverConnectionTime = connectEnd - connectStart

- serverResponseTime = responseStart - requestStart

- pageDownloadTime = responseEnd - responseStart

- redirectionTime = fetchStart - navigationStart

- domInteractiveTime = domInteractive - navigationStart

- domContentLoadedTime = domContentLoadedEventEnd - navigationStart
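
To compare Google Analytics figures with raw Navigation Timing data from another source, the list above can be applied mechanically. This is a minimal Python sketch under the assumption that the timing attributes are available as a dictionary of millisecond values; the table name, function name and sample data are made up for illustration.

```python
# Each metric is (end attribute, start attribute), exactly as listed above.
GA_SITE_SPEED = {
    "pageLoadTime": ("loadEventStart", "navigationStart"),
    "domainLookupTime": ("domainLookupEnd", "domainLookupStart"),
    "serverConnectionTime": ("connectEnd", "connectStart"),
    "serverResponseTime": ("responseStart", "requestStart"),
    "pageDownloadTime": ("responseEnd", "responseStart"),
    "redirectionTime": ("fetchStart", "navigationStart"),
    "domInteractiveTime": ("domInteractive", "navigationStart"),
    "domContentLoadedTime": ("domContentLoadedEventEnd", "navigationStart"),
}


def ga_site_speed(t):
    """Return the Site Speed metrics for one page view, in milliseconds."""
    return {name: t[end] - t[start] for name, (end, start) in GA_SITE_SPEED.items()}


# Hypothetical Navigation Timing values, relative to navigationStart = 0.
sample = {
    "navigationStart": 0, "fetchStart": 5,
    "domainLookupStart": 10, "domainLookupEnd": 30,
    "connectStart": 30, "connectEnd": 80,
    "requestStart": 85, "responseStart": 300, "responseEnd": 360,
    "domInteractive": 900, "domContentLoadedEventEnd": 1100,
    "loadEventStart": 1800,
}
metrics = ga_site_speed(sample)
print(metrics)
```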

New Relic

The New Relic browser page load timing feature is based directly on the Navigation Timing API.

- Navigation start = navigationStart

- First byte = responseStart

- DOM ready = domContentLoadedEventEnd

- Page ready = loadEventEnd

cURL

This command line utility provides all timing points from the start of the libcurl request. In this respect it is often the hardest to compare without some calculations.

- namelookup = from the start until the name resolving was completed.

- connect = from the start until the TCP connect to the remote host (or proxy) was completed.

- appconnect = from the start until the TLS connect/handshake to the remote host was completed.

- pretransfer = from the start until the file transfer was just about to begin. This includes all pre-transfer commands and negotiations that are specific to the particular protocol involved.

- starttransfer = from the start until the first byte was just about to be transferred. This includes pretransfer and also the time the server needed to calculate the result.

- redirect = all redirection steps including name lookup, connect, pretransfer and transfer before the final transaction was started. If this is 0 there were no redirects.

- total = the total time, in seconds, that the full operation lasted. The time will be displayed with millisecond resolution.

Additionally, these may be useful:

- TCP connection time = connect - namelookup

- Content generation = starttransfer - pretransfer

- Content transfer = total - starttransfer

- Server time = starttransfer - appconnect
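
Because every cURL value is cumulative from the start of the request, the intervals above have to be calculated by subtraction. A small Python sketch of those four calculations follows; the function name, the dictionary keys and the sample timings are assumptions for illustration, with all values in seconds.

```python
def curl_intervals(c):
    """Turn cumulative cURL timings (seconds) into the intervals listed above."""
    return {
        "tcp_connection": round(c["connect"] - c["namelookup"], 3),
        "content_generation": round(c["starttransfer"] - c["pretransfer"], 3),
        "content_transfer": round(c["total"] - c["starttransfer"], 3),
        "server_time": round(c["starttransfer"] - c["appconnect"], 3),
    }


# Hypothetical timings for a single HTTPS request.
sample = {
    "namelookup": 0.012,
    "connect": 0.045,
    "appconnect": 0.130,
    "pretransfer": 0.131,
    "starttransfer": 0.410,
    "total": 0.650,
}
intervals = curl_intervals(sample)
print(intervals)
```

Note that cURL reports appconnect as 0 when no TLS handshake takes place, so the server time figure is only meaningful for HTTPS requests.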

If you don’t already know the trick to obtaining cURL’s timing metrics, create a text file that defines the output format and pass it to curl with -w "@filename.txt" (the @ prefix tells curl to read the format from a file). For example, I use this pattern, saved as curl-format-csv.txt:

,%{remote_ip},%{http_code},%{ssl_verify_result},%{size_download},%{time_namelookup},%{time_connect},%{time_appconnect},%{time_pretransfer},%{time_redirect},%{time_starttransfer},%{time_total}\n

And run it with a basic shell script like the following, which adds a timestamp and the host it runs on:

    #!/bin/bash
    # Wild Strait, Jeremy 23/06/2016

    myHost=$(hostname -s)
    myFile="./wildstrait_curl_examplecom_${myHost}.csv"
    myRes="http://example.com/largefile.jpg"

    while true; do
      myDate=$(date "+%Y-%m-%d,%H:%M:%S")
      # -o /dev/null discards the body, -k skips certificate checks, -s hides progress
      myStats=$(curl --connect-timeout 20 -w "@curl-format-csv.txt" -o /dev/null -k -s "${myRes}")
      echo "${myDate},${myHost}${myStats}" >> "$myFile"
      #echo "Took cURL reading at ${myDate}"
      sleep 30
    done

That will continue running cURL indefinitely. Use nohup to allow it to run after you exit the terminal. You will need to kill the process to stop it.
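
The resulting CSV has no header row, so the column order has to be inferred from the script and the format file: date, time, host, then the fields of curl-format-csv.txt. A minimal Python sketch for reading those rows back is shown here; the column names, function name and sample row are my own labels rather than anything cURL emits.

```python
import csv
import io

# Column order implied by the shell script followed by curl-format-csv.txt.
COLUMNS = [
    "date", "time", "host", "remote_ip", "http_code", "ssl_verify_result",
    "size_download", "namelookup", "connect", "appconnect", "pretransfer",
    "redirect", "starttransfer", "total",
]
TIMING_COLUMNS = COLUMNS[7:]


def read_curl_log(text):
    """Parse the logged rows into dictionaries with float timings (seconds)."""
    rows = []
    for values in csv.reader(io.StringIO(text)):
        if len(values) != len(COLUMNS):
            continue  # skip blank or truncated lines
        row = dict(zip(COLUMNS, values))
        for name in TIMING_COLUMNS:
            row[name] = float(row[name])
        rows.append(row)
    return rows


# Hypothetical row as the script would write it for a plain HTTP request.
sample_log = (
    "2016-06-23,10:15:00,web01,192.0.2.10,200,0,1048576,"
    "0.012,0.045,0.000,0.045,0.000,0.410,0.650\n"
)
rows = read_curl_log(sample_log)
print(rows[0]["host"], rows[0]["total"])
```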

HAR

The HTTP Archive (HAR) is a JSON file format that records web page loading information. HAR files can be exported from almost all modern browsers. More interestingly from a scriptable and repeatable software performance testing point of view, the headless WebKit browser PhantomJS can output HAR files using one of the example JavaScript files that comes with it (/examples/netsniff.js).

I normally convert the HAR to CSV on the fly with a Python script, although the better way would be to write the JavaScript to output CSV directly. The advantage of the Python script is that it can summarise timings for the whole page, including loaded resources, rather than producing separate timings for every single resource. That makes initial comparative performance analysis much easier across time or across various web sites.

Once the data is in CSV we use Tableau to analyse it. As of Tableau 10.1 we can also import the HAR JSON directly.

There are two sections in the HAR archive that we’re most interested in when comparing against the Navigation Timing API. These are:

pageTimings

- onContentLoad - Content of the page loaded. Number of milliseconds since page load started (page.startedDateTime). Use -1 if the timing does not apply to the current request.

- onLoad - Page is loaded (onLoad event fired). Number of milliseconds since page load started (page.startedDateTime). Use -1 if the timing does not apply to the current request.

timings

- blocked - Time spent in a queue waiting for a network connection. Use -1 if the timing does not apply to the current request.

- dns - DNS resolution time. The time required to resolve a host name. Use -1 if the timing does not apply to the current request.

- connect - Time required to create TCP connection. Use -1 if the timing does not apply to the current request.

- send - Time required to send HTTP request to the server.

- wait - Waiting for a response from the server.

- receive - Time required to read entire response from the server (or cache).

- ssl (new in 1.2) - Time required for SSL/TLS negotiation. If this field is defined then the time is also included in the connect field (to ensure backward compatibility with HAR 1.1). Use -1 if the timing does not apply to the current request.
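
To give an idea of what a page-level summary of these fields might look like, here is a minimal Python sketch. It is not the script referred to earlier; the function name and sample data are hypothetical. It assumes a HAR 1.2 structure that has already been loaded into a dictionary, skips -1 values, and leaves ssl out of the totals because that time is already included in connect.

```python
TIMING_KEYS = ("blocked", "dns", "connect", "send", "wait", "receive")


def summarise_har(har):
    """Sum per-request timings (ms) and attach the first page's pageTimings."""
    log = har["log"]
    totals = dict.fromkeys(TIMING_KEYS, 0)
    for entry in log["entries"]:
        timings = entry["timings"]
        for key in TIMING_KEYS:
            value = timings.get(key, -1)
            # -1 (or a missing value) means the timing does not apply.
            if value is not None and value >= 0:
                totals[key] += value
    page = log["pages"][0]["pageTimings"] if log.get("pages") else {}
    totals["onContentLoad"] = page.get("onContentLoad", -1)
    totals["onLoad"] = page.get("onLoad", -1)
    totals["requests"] = len(log["entries"])
    return totals


# Hypothetical two-request HAR fragment.
sample_har = {"log": {
    "pages": [{"pageTimings": {"onContentLoad": 900, "onLoad": 1500}}],
    "entries": [
        {"timings": {"blocked": 2, "dns": 20, "connect": 50, "send": 1,
                     "wait": 200, "receive": 40, "ssl": 30}},
        {"timings": {"blocked": 10, "dns": -1, "connect": -1, "send": 1,
                     "wait": 80, "receive": 15}},
    ],
}}
summary = summarise_har(sample_har)
print(summary)
```

The summed values are cumulative across requests rather than wall-clock time, since browsers fetch resources in parallel; onLoad remains the figure to compare against loadEventStart-style metrics. A real file could be loaded with `json.load` before being passed in.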

Tableau

At Wild Strait we use the incredible Tableau for data analysis. You should be able to use the above information to create calculated fields. For Google Analytics, you will need to also make use of the Useful GA Measures page to correctly account for their sampling size. When you are choosing your measures and dimensions in the Tableau GA Connector, ensure you add Speed Metric Counts at the very least.
