Presenting on Serverless Computing - your next step in Cloud

by Carl Douglas on 24 July 2018

Cloud computing continues to evolve, with many of the major providers now offering Serverless Computing, often also referred to as Functions-as-a-Service. To help make sense of how Serverless computing works and what it offers, I presented an overview to Equinox IT clients and team members. Based on my experience so far with Cloud-deployed applications, I'm excited by the potential use cases and the advantages that Serverless computing has to offer.

What is Serverless Computing?

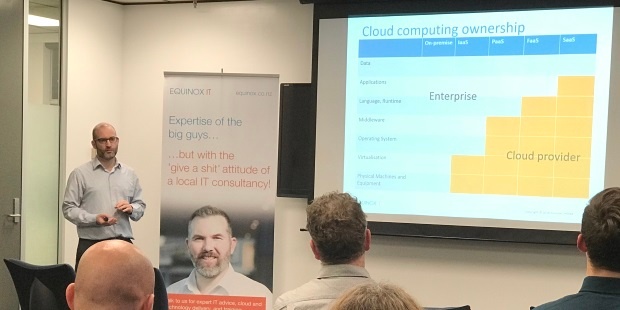

The term Serverless Computing does not entail the disappearance of server infrastructure altogether. Rather, the premise is that developers, operators and owners of Cloud-based applications no longer need to think about servers. Serverless computing involves full automation of the provisioning and scaling of the underlying infrastructure, including virtual machines, operating systems, containers and container orchestration. More than the commoditisation of infrastructure, it is the near-complete automation of infrastructure.

Another term often used when talking about Serverless Computing is Functions-as-a-Service (FaaS). FaaS can be viewed as a step in the evolution of Platform-as-a-Service, adding language runtimes such as Python, Java, JavaScript, C# and Go, together with event-driven invocation of code written in those programming languages. FaaS is a meaningful term in the sense that an application can be constructed out of a collection of functions, and the unit of deployment can be as small as a single function.

One of the characteristics of Serverless Computing is that, as far as we are concerned, there are no long-lived processes. A Serverless or Cloud Function is invoked by an event; the function handles that event and then returns without accumulating any local state. Statelessness in this context covers both memory and disk: although there is typically temporary disk space available, it may not persist between invocations of a function. Of course, a function may use another service, such as a database, to update or retrieve application data.
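As a minimal sketch of what such a function can look like, the Python handler below takes an event, does its work and returns, keeping nothing in memory or on disk for the next invocation. The two-argument shape is loosely modelled on the `(event, context)` style some providers use, and the event field `name` and the response layout are assumptions made for illustration.

```python
import json


def handler(event, context=None):
    """Handle one event and return; no state survives the call."""
    # Everything the function needs arrives in the event itself.
    name = event.get("name", "world")

    # The result is returned to the caller rather than stored locally.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}"}),
    }


# Simulating two independent invocations locally.
first = handler({"name": "Ada"})
second = handler({})
```

Because the handler depends only on its input, the provider is free to run each invocation on a different machine or container.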

Other characteristics include automated provisioning of infrastructure, and automated scaling of infrastructure based on demand. This means that as demand is increased by arrival of events the underlying infrastructure is scaled outwards to service that load.

As hinted above, given that functions are consumers of events, another characteristic is that Serverless Computing naturally implies an Event-Driven Architecture. Functions may also be producers of events, and so communication between functions and other systems is achieved by patterns of event notification.
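The consumer and producer roles can be sketched with a toy in-memory event bus. This is only an illustration of the pattern, not how any provider routes events; the `EventBus` class, the event names `image.uploaded` and `image.resized`, and both functions are hypothetical.

```python
from collections import defaultdict, deque


class EventBus:
    """Toy in-memory stand-in for a provider's event routing."""

    def __init__(self):
        self.subscribers = defaultdict(list)
        self.pending = deque()

    def subscribe(self, event_type, function):
        self.subscribers[event_type].append(function)

    def publish(self, event_type, payload):
        self.pending.append((event_type, payload))

    def run(self):
        # Deliver events until none are left; functions may emit new ones.
        while self.pending:
            event_type, payload = self.pending.popleft()
            for function in self.subscribers.get(event_type, []):
                for new_type, new_payload in function(payload):
                    self.publish(new_type, new_payload)


notifications = []


def resize_image(payload):
    # Consumer of "image.uploaded" and producer of "image.resized".
    return [("image.resized", {"key": payload["key"], "width": 128})]


def notify_owner(payload):
    # Consumer only: records a notification and emits nothing further.
    notifications.append(f"{payload['key']} resized to {payload['width']}px")
    return []


bus = EventBus()
bus.subscribe("image.uploaded", resize_image)
bus.subscribe("image.resized", notify_owner)
bus.publish("image.uploaded", {"key": "cat.png"})
bus.run()
```

Neither function calls the other directly; they are coupled only through the events they consume and produce.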

Benefits

A primary benefit of Serverless is the ease of deploying applications, as there is no need to provision or configure applications for specific infrastructure requirements. In fact, frameworks such as serverless.com simplify deployment further through tooling and templates for specific providers, so that a deployment can be as simple as running a single command.

Another significant benefit of Serverless Computing is that providers charge for the number of invocations of a cloud function and for the time the function spends executing. An unused function has a zero compute cost.
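A rough back-of-the-envelope sketch of this billing model follows. The two prices are placeholders invented for illustration and are not any provider's actual rates. Weighting execution time by allocated memory is also an assumption, though a number of providers bill along those lines.

```python
def monthly_compute_cost(invocations, avg_duration_s, memory_gb,
                         price_per_invocation, price_per_gb_second):
    """Estimate compute cost as a per-invocation charge plus a duration charge."""
    request_cost = invocations * price_per_invocation
    duration_cost = (invocations * avg_duration_s * memory_gb
                     * price_per_gb_second)
    return request_cost + duration_cost


# Placeholder prices, made up for this example only.
PRICE_PER_INVOCATION = 0.0000002
PRICE_PER_GB_SECOND = 0.00001

# One million invocations of 200 ms each with 0.5 GB of memory.
busy = monthly_compute_cost(1_000_000, 0.2, 0.5,
                            PRICE_PER_INVOCATION, PRICE_PER_GB_SECOND)

# A deployed function that is never invoked.
idle = monthly_compute_cost(0, 0.2, 0.5,
                            PRICE_PER_INVOCATION, PRICE_PER_GB_SECOND)
```

Both terms are multiplied by the number of invocations, which is why the idle case comes out at zero.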

Having scalable and available infrastructure means never being under or over capacity. For applications where demand is difficult to forecast, this takes the guesswork out of capacity planning.

Use cases

A few use cases come to mind now that we have some understanding of Serverless and its benefits. For example, it gives us the ability to rapidly build client-specific APIs, Back Ends for Front Ends (such as RESTful endpoints), callbacks for SaaS applications, and scheduled jobs that can be triggered by timers.

Wider implications

Serverless Computing is coming of age in the era of Continuous Deployment, and the typical deployment model of Serverless Computing benefits from maturity with Continuous Deployment practices.

Serverless Computing assumes an Event-Driven Architecture. An existing application may not be compatible with this style of architecture, and developers may not be familiar with it.

Serverless represents a paradigm shift, requiring a mindset change much greater than that required by containerization, for example.

Providers

There are many providers who offer Serverless Computing: Amazon Web Services Lambda, Microsoft Azure Functions, Google Cloud Functions, IBM OpenWhisk, Fn, Webtask, Spotinst, Kubeless, Alibaba Cloud Function Compute, and many more.

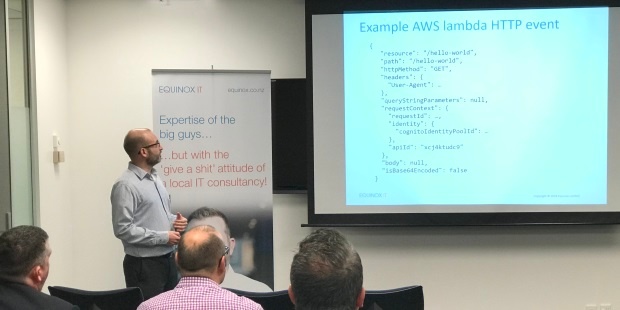

Note that the function signature used by the providers is not universally shared, so code is not fully portable when moving from one provider to another. However, the CloudEvents initiative aims to standardise the specification of an event data structure. There are also frameworks such as serverless.com that can help generate boilerplate code for some of the providers.

serverless.com also offers an event gateway that can translate and forward events to specific providers.
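One common way to soften the signature differences is to keep the business logic in a plain function and wrap it in thin adapters, one per provider. The sketch below assumes two entry-point shapes, one taking `(event, context)` and one taking a single request object with a `get_json()` method; these are loosely modelled on styles seen in practice, and `greet`, both adapters and `FakeRequest` are hypothetical names used only for illustration.

```python
def greet(name):
    """Provider-independent business logic."""
    return f"Hello, {name}"


def event_context_adapter(event, context):
    # Shape A: the provider passes an event dict and a context object.
    return {"body": greet(event["name"])}


def request_adapter(request):
    # Shape B: the provider passes one request object with parsed JSON.
    return greet(request.get_json()["name"])


class FakeRequest:
    """Stand-in for a provider's request object, for local testing only."""

    def __init__(self, payload):
        self._payload = payload

    def get_json(self):
        return self._payload


result_a = event_context_adapter({"name": "Ada"}, context=None)
result_b = request_adapter(FakeRequest({"name": "Ada"}))
```

Moving to another provider then means writing a new adapter rather than rewriting `greet`.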

Limitations

There are typically default limits on the concurrency of Serverless Computing; for example, AWS Lambda provides a default of 1000 concurrent executions, which can be increased on request. Concurrency can also be reserved per function: setting it to a lower number such as 10 causes throttling to occur on the 11th concurrent invocation. This is a partial mitigation for a Denial of Service attack, and also offers some cost control.
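The throttling behaviour can be illustrated with a small simulation. This is a sketch of the idea rather than any provider's implementation; the class `ReservedConcurrency` and its methods are hypothetical.

```python
class ReservedConcurrency:
    """Simulate a per-function cap on concurrent invocations."""

    def __init__(self, limit):
        self.limit = limit
        self.in_flight = 0

    def try_start(self):
        # Reject (throttle) the invocation if the cap is already reached.
        if self.in_flight >= self.limit:
            return False
        self.in_flight += 1
        return True

    def finish(self):
        # A completed invocation frees a slot for the next one.
        if self.in_flight > 0:
            self.in_flight -= 1


pool = ReservedConcurrency(limit=10)

# Eleven invocations arrive before any of them has finished.
outcomes = [pool.try_start() for _ in range(11)]

# Once one invocation completes, a new one is accepted again.
pool.finish()
accepted_after_finish = pool.try_start()
```

The first ten invocations are accepted and the eleventh is throttled, which caps both the load passed on to downstream systems and the compute cost a flood of requests can incur.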

There is also a default maximum execution time. On Lambda, for example, it is 5 minutes at the time of writing, at which point an executing function may be terminated by the provider.
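One way to live with such a limit is for a function to check how much time it has left and stop cleanly before the provider terminates it. The sketch below assumes a context object with a `get_remaining_time_in_millis()` method, modelled on the one Lambda exposes to Python handlers; `FakeContext`, `process_batch` and the safety margin are hypothetical and exist only so the example runs locally.

```python
class FakeContext:
    """Local stand-in for a provider context; each check 'uses up' time."""

    def __init__(self, remaining_ms, cost_per_check_ms):
        self.remaining_ms = remaining_ms
        self.cost_per_check_ms = cost_per_check_ms

    def get_remaining_time_in_millis(self):
        current = self.remaining_ms
        self.remaining_ms -= self.cost_per_check_ms
        return current


def process_batch(items, context, safety_margin_ms=1000):
    """Process items until done or until time is nearly exhausted."""
    processed = []
    for item in items:
        # Stop early rather than risk being cut off mid-item.
        if context.get_remaining_time_in_millis() < safety_margin_ms:
            break
        processed.append(item * 2)
    # Unprocessed items are returned so a later invocation can resume.
    return {"processed": processed, "remaining": items[len(processed):]}


result = process_batch([1, 2, 3, 4, 5],
                       FakeContext(remaining_ms=3500, cost_per_check_ms=1000))
```

The leftover items could be handed to a follow-up invocation, for example by publishing them as a new event.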

Key points

Serverless Computing is a milestone in the commoditisation of automated infrastructure. It is well suited to enterprises that have a degree of maturity with Continuous Delivery. It implies an Event-Driven Architecture which means applications may need to be adapted and development teams familiarised with this style of architecture and the relevant integration patterns.

Summary

It would seem prudent to improve on Continuous Delivery practices and consider the impact of integrating legacy applications with other systems in an Event-Driven Architecture style, ahead of transitioning to Serverless Computing to better realise the benefits.

Carl Douglas is a Senior Consultant specialising in mobile and Cloud architecture and applications, based in Wellington.
